Systems General Overview

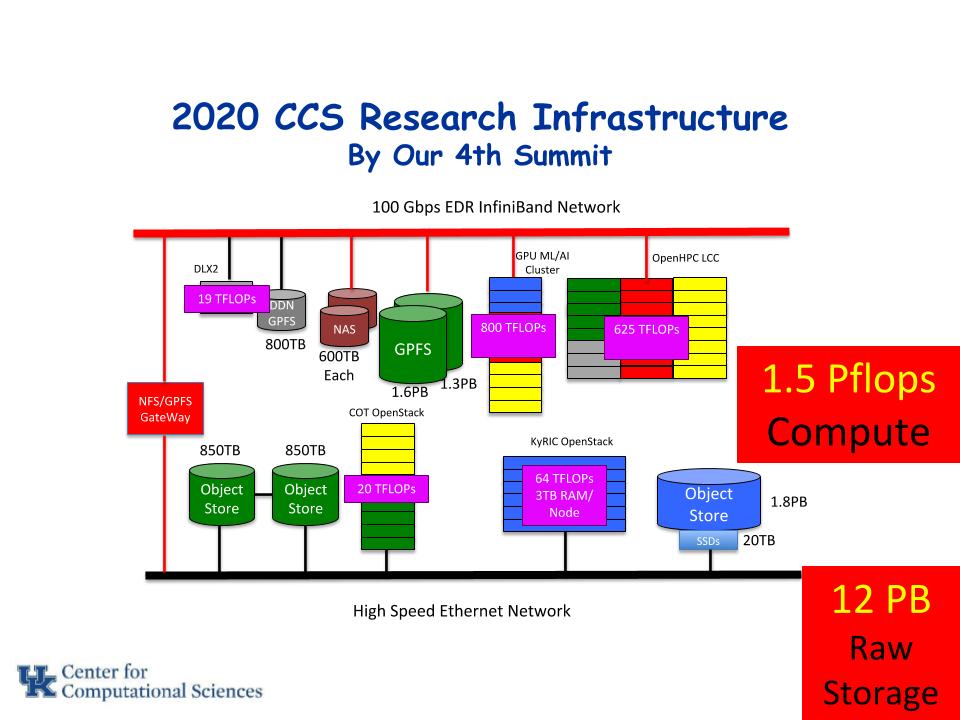

The center operates a $6 million central and distributed supercomputing facility containing nearly 16,000 processor cores and 120 high-performance graphics processing units (GPUs), with a combined peak performance of 1.5 petaflops. Hardware includes about 470 compute nodes with memory ranging from 64 GB to 3 TB per node. Each compute node has between 16 and 48 cores. All compute nodes are networked with an FDR and EDR InfiniBand interconnect fabric. The high-performance GPFS parallel file systems attached to this central cluster contain 3 PB of usable disk storage for home, project, and scratch directories. The cluster includes a dedicated backup node running IBM’s Tivoli Storage Manager (TSM) and Hierarchical Storage Manager (HSM), with a 10 Gb link to UK’s central backup system that provides access to the university’s near-line tape storage system. The facility also has dedicated high-speed 40 Gb data transfer nodes for researchers who need to move data to and from external and off-site resources.

The center also provides programmable cloud infrastructure-as-a-service, managed cloud-native platforms and applications, consulting, and research and development on new cloud computing technologies, including OpenStack deployments, distributed systems, and interactive computing environments such as Jupyter notebooks. These computing environments foster reproducible, shareable computing through containers and virtualization. These services run on 50 compute nodes with Intel processors (1,600 cores), each node having 3 TB of RAM, and are supported by an object storage system with 2 PB of usable capacity. A smaller OpenStack deployment (CoT OpenStack) has a mix of nodes: six 64-core nodes with 500 GB of RAM and nine 40-core nodes with 512 GB of RAM.

Other high-performance computing resources are also available to researchers through the Center for Computational Science. The National Science Foundation’s Extreme Science and Engineering Discovery Environment (XSEDE), the world’s largest distributed infrastructure for open scientific research, is a single virtual system that scientists can use to share computing resources, data, and expertise through a premier collection of integrated digital resources and services. Through the National Science Foundation’s Campus Champions program, UK researchers have on-campus consulting support for submitting allocation requests to XSEDE and for accessing powerful national supercomputing facilities for modeling, simulation, and advanced data analysis and visualization. Researchers can run their own custom code, and most well-known scientific codes are also available through XSEDE resources. These resources are available at no cost to users.